Raptor-Test: Difference between revisions

m (→Buy Now) |

(→FAQ) |

||

| (31 intermediate revisions by 8 users not shown) | |||

| Line 1: | Line 1: | ||

{{Navigation}} [[Raptor-Platform | Raptor Platform]] > '''Raptor-Test''' | {{Navigation}} [[Raptor-Platform | Raptor Platform]] > '''Raptor-Test''' | ||

[[ | [[image:RaptorTest_RaptorPlatform.png|right|550px|thumb]] | ||

=Introduction= | =Introduction= | ||

Raptor-Test | |||

New Eagle has developed an automated testing platform (Raptor-Test) for Motohawk and Raptor™ models that greatly improves the effectiveness and speed of software verification. | |||

===Raptor-Test Video Introduction=== | |||

'''[http://www.neweagle.net/support/wiki/video/NEAT_Intro_2_19_2014_BFH.mp4 Automated Testing Introduction (VIDEO)]''' | |||

===Raptor-Test Powerpoint Introductory=== | |||

'''[[Raptor-Test_Downloads|Raptor-Test Overview (PPT)]]''' | |||

*Facilitates testing of model-based software against requirements through '''[https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test#Simulated_Hardware_In_the_Loop_.28SimHIL.29 simulated hardware-in-the-loop (SimHIL)]''' | |||

*Provides an intuitive graphical PC interface for authoring and executing test scripts over a USB-to-CAN interface | |||

*Encourages accumulation of verification infrastructure over project lifetime, allowing validation effort to scale in a write-once run-many fashion | |||

*Allows for increased confidence in production intent software releases. | |||

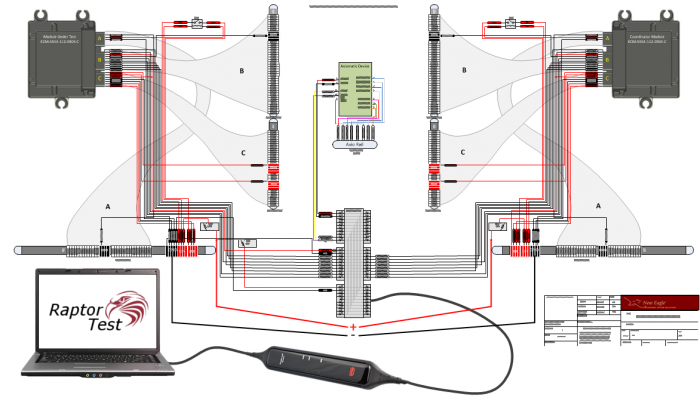

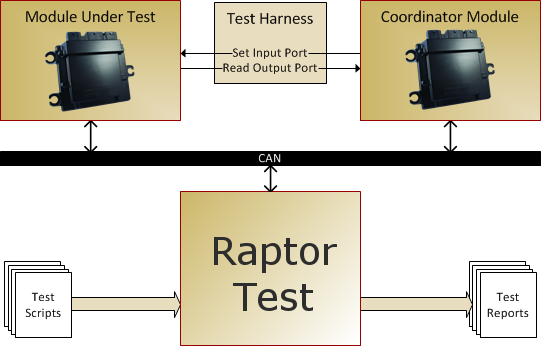

The following figure shows an example of an Automated Test bench design to verify specific software requirements. | |||

[[image:RaptorTest_Layout.png|700px]] | |||

'''Motivation for Raptor-Test''' | |||

''How do you validate your production control software works properly prior to release?'' | |||

''How do you make sure that you don't break something you've already tested when you implement a new feature?'' | |||

''How do you assure that a service patch (bug fix) doesn't cause other issues?'' | |||

Often, in a controls development program the software is validated empirically over time as the controls functionality is developed, debugged and calibrated. In the typical scenario, controls complexity grows over time, initially focusing on basic operation (does it run), and working on finer-grain features later such as diagnostic messaging and cruise control. The cruise control functionality is developed and tested when it is implemented & calibrated - then the team moves on to other features. The problem that is often encountered with this approach is that changes made to the software in later stages can result in defects in previously implemented functionality. Since the prior functionality was 'checked-out' at an earlier time it is assumed good until an issue manifests at a later point, sometimes in a fielded product. Another issue that arises is in making an update to a fielded product, sometimes after some months/years have gone by - will the change cause other issues? ''In the best cases'', successful production programs take a more rigorous approach to production validation usually involving a lengthy written procedure (test plan) describing a manual checkout process executed against the production intent software/system. This procedure can be brought up at a later time and run against a software build to verify things work properly. Often this procedure is actually printed out and executed by an individual or team against the production intent vehicle to validate the controls software release. The individual(s) executing the test plan will work through the plan by driving the vehicle, pulling sensor wires and/or overriding values in MotoTune to exercise features and inject faults, and writing down pass/fail on the test plan. For a sufficiently complex system the execution of this test plan could take many days of focused effort. This is a '''time-consuming, error-prone, and expensive method''' of verifying the function of the production intent software. 
If you are creating production controls system - does this sound familiar? | |||

[[image:test1.PNG|700px]] | |||

Over the course of a decade of experience with this approach to system validation it never quite felt right. What we found was that there was not a good way to scale our effort in testing the system. As mentioned, a detailed test plan was created ''in the best cases'' but even then it was often relatively high-level, and would require significant effort to execute a lot of manual steps. What we were looking for was a way to test something and reuse our effort in testing it later, accumulating tests over time (building a test suite) so that we could gain increased confidence with the system functionality at each release. We needed a way to automate the manual steps so we could dive deeper in our testing to get better coverage. We needed a way to automate test execution so we could scale our validation development effort, allowing us to '''spend our validation time building the tests - not on running them'''. | |||

[[image:test2.PNG|700px]] | |||

This is why we built New Eagle's automated testing framework, Raptor-Test, which we hope you'll find is an excellent solution to the problem of validating your production controls. | |||

=Downloads= | =Downloads= | ||

| Line 15: | Line 49: | ||

|- | |- | ||

|style = "height:180px; width:180px;"| | |style = "height:180px; width:180px;"| | ||

[[Image:Raptor-Test_MarketingSheet.jpg|150px|link= | [[Image:Raptor-Test_MarketingSheet.jpg|150px|link=https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test_Downloads]] | ||

|style = "height:180px; width:180px;"| | |style = "height:180px; width:180px;"| | ||

[[Image:RaptorTest_UserManual_Screenshot.PNG|150px|link=https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test_Downloads]] | |||

|style = "height:180px; width:180px;"| | |style = "height:180px; width:180px;"| | ||

If you have already purchased a software license, you can download the latest release of the Raptor-Test software at [http://software.neweagle.net/issues/plugin.php?page=Artifacts/index software.neweagle.net]. | If you have already purchased a software license, you can download the latest release of the Raptor-Test software at [http://software.neweagle.net/issues/plugin.php?page=Artifacts/index software.neweagle.net]. | ||

|} | |} | ||

= | = Features = | ||

New Eagle's Raptor-Test tool provides a friendly interface between your PC and the rest of your testing setup, allowing you to set calibrations and overrides inside a running MotoHawk or Raptor model to verify the required software functions. The AT setup configuration can vary depending on the application's testing requirements. For some applications, the only required test components are: a PC to run Raptor-Test, a Kvaser cable for CAN-to-USB connection, and the Test Module. For other applications, Raptor-Test can interface with both a Test Module and a Coordinator Module via CAN to enable simulation of larger and more complex systems. The Coordinator Module typically runs a plant model, transmitting and receiving simulated sensor and actuation signals between the two modules. These signals can be CAN inputs and outputs or analog/discrete inputs and outputs between the modules. Raptor-Test reads the MotoHawk or Raptor probes to verify that the application software in the Test Module functions as required. | |||

[[File:RaptorTest_Architecture.png|550px]] | |||

*Scripted tests for reliable, repeatable testing | |||

*Run from the command line, MATLAB scripts, or with an interactive console. | |||

*Send and receive CAN messages | |||

*Set calibrations inside a running Raptor or MotoHawk model | |||

*Read probes from inside a running Raptor or MotoHawk model | |||

*Simulate behavior of the entire system: | |||

**Set override values for digital and analog inputs | |||

**Connect the unit-under-test to a coordinator module running a plant model of the rest of the system | |||

*Generates reports after each test run: | |||

**PDF reports for easy reading | |||

**XML reports for consumption by other software systems | |||

*Utilize Coordinator Module to send CAN messages | |||

*Verify CAN messages | |||

*Test CAN data time out | |||

*Verify logic (for example: Simulink model/State Flow) in the Test Module | |||

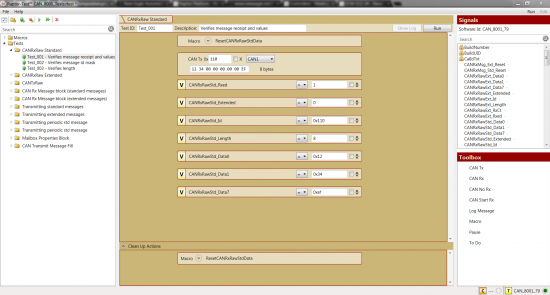

*Easy to use Graphical console for building and executing scripts: | |||

[[File:TestComposition.png|550px]] | |||

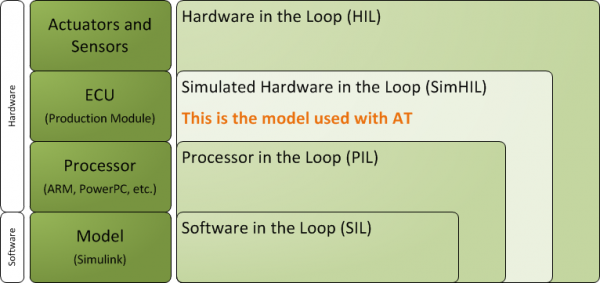

=Simulated Hardware In the Loop (SimHIL)= | |||

Simulated Hardware-in-the-Loop testing (or SimHIL) is a control systems validation strategy using simulated I/O to verify that the software functions match the application requirements. The goal of using a SimHIL approach is to validate software on the production hardware with physical signals to stimulate the module. Simulated I/O can include both CAN transmit and receive as well as analog/discrete inputs and outputs. There are a variety of strategies that can be used to test your software functionality, including setting of software overrides by Raptor-Test over the MotoTune protocol. This allows certain subsystems and states to be methodically triggered and tested. A Coordinator module can be utilized to simulate the analog/discrete I/O or CAN interfaces with other modules in the point of use application. The SimHIL testing setup can be easily tailored to each customer application based on the software requirements. | |||

= | === Difference between 'in-the-loop' Testing === | ||

*Hardware in the loop (HIL) Simulation is a system test with part of the hardware components represented by complex mathematical models or controllers. | |||

*Simulated Hardware in the loop (SimHIL) Simulation is more like HIL, but 'the hardware components' are replaced by simulated models and controllers. | |||

*Processor in the loop (PIL) Simulation is with code running in the microprocessor on the target hardware or board. | |||

*Software in the loop (SIL) Simulation is with code running in the PC, no hardware involves. | |||

[[File: XIL Diagram.png|600px]] | |||

= | = Frequently Asked Questions (FAQ) = | ||

For a list of | For a list of common questions and answers about Raptor-Test, see our Raptor-Test [https://support.neweagle.net/support/home FAQ Page] | ||

= | =New Eagle Raptor-Test Products= | ||

[[File:Dongle.png||thumb|USB license dongle]] | [[File:Dongle.png||thumb|USB license dongle]] | ||

'''License Options''' | '''License Options''' | ||

| Line 46: | Line 111: | ||

|- | |- | ||

|Raptor-Test | |style = "width:300px; text-align: center;" |Raptor-Test Software | ||

| | |RAP-TEST-SW-01 | ||

| | |'''[https://store.neweagle.net/shop/raptor/raptor-software/raptor-test-automated-testing-validation-tool/raptor-test-software-node-lock/ Buy Now]''' | ||

|- | |||

|} | |||

{| class="wikitable" style="text-align: center;" cellpadding = "5" | |||

!scope="col" style = "width:300px;"|Maintenance Product | |||

!scope="col" style = "width:250px;"|Part Number | |||

!scope="col" style = "width:250px;"|Webstore | |||

|- | |- | ||

|Raptor-Test | |Raptor-Test, Annual Software Maintenance | ||

| | |RAP-TEST-SM-01 | ||

|Please contact [mailto:sales@neweagle.net sales] | |'''[https://store.neweagle.net/shop/raptor/raptor-software/raptor-test-automated-testing-validation-tool/raptor-test-annual-software-maintenance/ Buy Now]''' | ||

|- | |||

|} | |||

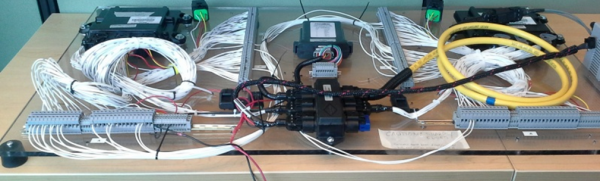

==Custom Automated Testing Bench== | |||

Here is an Automated Testing bench designed and built for a customer. It includes a Module Under Test, a Coordinator Module, and a CAN-to-Analog Module for simulated Hardware-in-the-Loop testing. This bench enables the verification of the specific requirements of the customer's application. | |||

Please [mailto:sales@neweagle.net Contact Sales] for more information. | |||

[[image:NEAT_ActualBench.PNG|600px]] | |||

=Release Notes= | |||

For a list of all Raptor-Test releases and notes on each release, see our release notes [[Raptor-Test-Release-Notes | here]] | |||

==New Eagle Raptor-Test Training== | |||

New Eagle offers training for using the Raptor-Test testing tool. | |||

=====RAPTOR TOOL TRAINING SEAT===== | |||

{|class="wikitable" cellpadding = "5" | |||

!scope="col"|Picture | |||

!scope="col"|For More Information | |||

|- | |||

|style = "width: 50%"| | |||

<gallery>File:RAP-TEST-TRAINING.jpg</gallery> | |||

|style = "width: 50%; text-align: center;"| | |||

'''Please contact [mailto:sales@neweagle.net sales]''' | |||

|} | |||

=====RAPTOR TOOL TRAINING ON-SITE===== | |||

{|class="wikitable" cellpadding = "5" | |||

!scope="col"|Picture | |||

!scope="col"|For More Information | |||

|- | |- | ||

| | |style = "width: 50%"| | ||

| | <gallery>File:RAP-TEST-TRAINING OS.jpg</gallery> | ||

|style = "width: 50%; text-align: center;"| | |||

'''Please contact [mailto:sales@neweagle.net sales]''' | |||

|} | |} | ||

Raptor-Test

Latest revision as of 17:56, 31 January 2023

Introduction

New Eagle has developed Raptor-Test, an automated testing platform for MotoHawk and Raptor™ models that greatly improves the effectiveness and speed of software verification.

Raptor-Test Video Introduction

[Automated Testing Introduction (VIDEO)](http://www.neweagle.net/support/wiki/video/NEAT_Intro_2_19_2014_BFH.mp4)

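Raptor-Test PowerPoint Introduction

[Raptor-Test Overview (PPT)](https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test_Downloads)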

- Facilitates testing of model-based software against requirements through simulated hardware-in-the-loop (SimHIL)

- Provides an intuitive graphical PC interface for authoring and executing test scripts over a USB-to-CAN interface

- Encourages accumulation of verification infrastructure over the project lifetime, allowing validation effort to scale in a write-once, run-many fashion

- Allows for increased confidence in production-intent software releases

The following figure shows an example of an automated test bench designed to verify specific software requirements.

Motivation for Raptor-Test

How do you validate that your production control software works properly before release?

How do you make sure that you don't break something you've already tested when you implement a new feature?

How do you ensure that a service patch (bug fix) doesn't cause other issues?

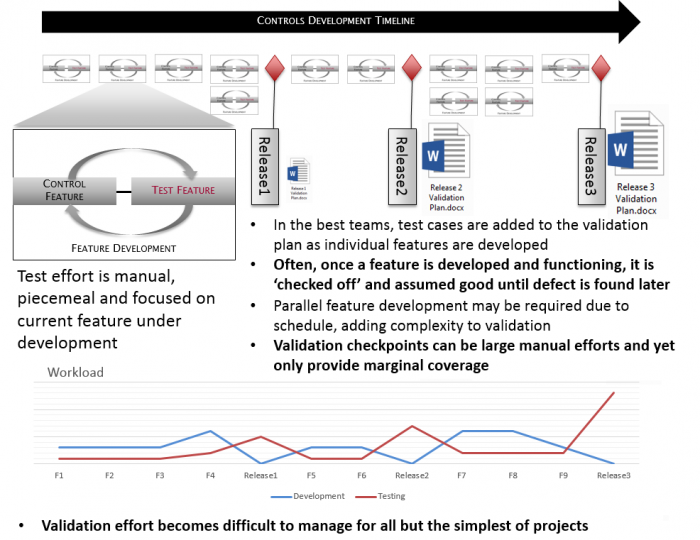

Often, in a controls development program, the software is validated empirically over time as the controls functionality is developed, debugged, and calibrated. In the typical scenario, controls complexity grows over time: work initially focuses on basic operation (does it run?) and later moves to finer-grained features such as diagnostic messaging and cruise control. The cruise control functionality is developed and tested when it is implemented and calibrated, and then the team moves on to other features. The problem often encountered with this approach is that changes made to the software in later stages can introduce defects into previously implemented functionality. Because that functionality was 'checked out' at an earlier time, it is assumed to be good until an issue manifests later, sometimes in a fielded product. Another issue arises when updating a fielded product, sometimes after months or years have gone by: will the change cause other issues?

In the best cases, successful production programs take a more rigorous approach to production validation, usually involving a lengthy written procedure (test plan) describing a manual checkout process executed against the production-intent software and system. This procedure can be brought out later and run against a new software build to verify that things still work properly. Often the procedure is printed out and executed by an individual or team against the production-intent vehicle to validate the controls software release. The people executing the test plan work through it by driving the vehicle, pulling sensor wires, and/or overriding values in MotoTune to exercise features and inject faults, writing down pass/fail results on the plan as they go. For a sufficiently complex system, executing this test plan can take many days of focused effort. This is a time-consuming, error-prone, and expensive way to verify the function of production-intent software.

If you are creating a production controls system, does this sound familiar?

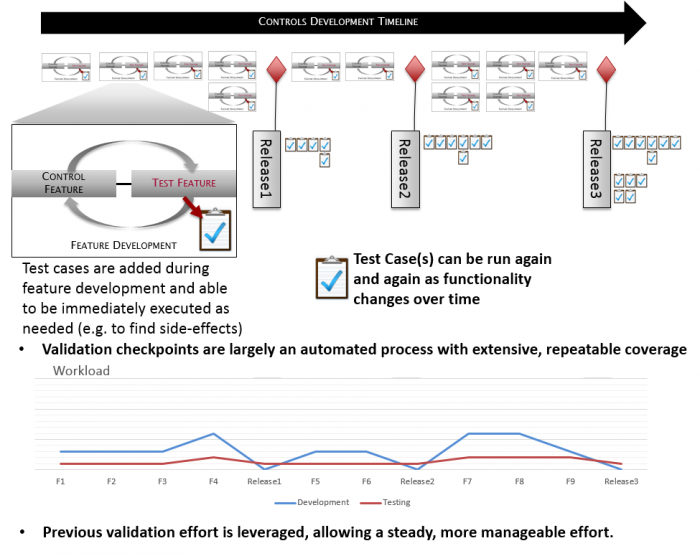

Over a decade of using this approach to system validation, we never felt it was quite right. We found that there was no good way to scale our testing effort. As mentioned, a detailed test plan was created in the best cases, but even then it was often relatively high-level and required significant effort to execute many manual steps. We were looking for a way to test something once and reuse that effort later, accumulating tests over time (building a test suite) so that we could gain more confidence in the system's functionality with each release. We needed a way to automate the manual steps so we could test more deeply and get better coverage. We needed a way to automate test execution so we could scale our validation effort, allowing us to spend our validation time building the tests, not running them.

This is why we built Raptor-Test, New Eagle's automated testing framework. We hope you'll find it an excellent solution to the problem of validating your production controls.

Downloads

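| Product Summary | User Manual | Software |
|---|---|---|
| [Product summary (downloads page)](https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test_Downloads) | [User manual (downloads page)](https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test_Downloads) | If you have already purchased a software license, you can download the latest release of the Raptor-Test software at [software.neweagle.net](http://software.neweagle.net/issues/plugin.php?page=Artifacts/index). |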

Features

New Eagle's Raptor-Test tool provides a friendly interface between your PC and the rest of your test setup, allowing you to set calibrations and overrides inside a running MotoHawk or Raptor model to verify the required software functions. The automated test setup can vary with the application's testing requirements. For some applications, the only required test components are a PC running Raptor-Test, a Kvaser cable for the CAN-to-USB connection, and the Test Module. For other applications, Raptor-Test can communicate over CAN with both a Test Module and a Coordinator Module to simulate larger and more complex systems. The Coordinator Module typically runs a plant model, exchanging simulated sensor and actuator signals with the Test Module; these signals can be CAN inputs and outputs or analog/discrete inputs and outputs. Raptor-Test reads MotoHawk or Raptor probes to verify that the application software in the Test Module functions as required.
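The workflow described above follows a repeatable pattern: set a calibration or override in the running model, then read a probe and compare it with the requirement. The sketch below illustrates only that pattern and is not the Raptor-Test scripting API. The `ModuleConnection` interface and the `FakeTestModule` stand-in are hypothetical. The stand-in replaces the real CAN connection to a Test Module. The names `FanEnableTempThresh`, `CoolantTemp` and `FanCmd` are hypothetical as well.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol, Tuple


class ModuleConnection(Protocol):
    """Hypothetical connection to a running model (NOT the Raptor-Test API)."""

    def set_calibration(self, name: str, value: float) -> None: ...

    def set_override(self, name: str, value: float) -> None: ...

    def read_probe(self, name: str) -> float: ...


@dataclass
class FakeTestModule:
    """Hypothetical stand-in for a Test Module: commands a fan above a calibrated temperature."""

    calibrations: Dict[str, float] = field(default_factory=lambda: {"FanEnableTempThresh": 95.0})
    inputs: Dict[str, float] = field(default_factory=lambda: {"CoolantTemp": 20.0})

    def set_calibration(self, name: str, value: float) -> None:
        self.calibrations[name] = value

    def set_override(self, name: str, value: float) -> None:
        # An override replaces the input the model would otherwise read from hardware.
        self.inputs[name] = value

    def read_probe(self, name: str) -> float:
        if name == "FanCmd":
            hot = self.inputs["CoolantTemp"] > self.calibrations["FanEnableTempThresh"]
            return 1.0 if hot else 0.0
        raise KeyError(f"unknown probe: {name}")


ScriptedTest = Callable[[ModuleConnection], bool]


def check_fan_on_above_threshold(ecu: ModuleConnection) -> bool:
    ecu.set_calibration("FanEnableTempThresh", 90.0)
    ecu.set_override("CoolantTemp", 100.0)  # inject a simulated hot-engine reading
    return ecu.read_probe("FanCmd") == 1.0


def check_fan_off_below_threshold(ecu: ModuleConnection) -> bool:
    ecu.set_calibration("FanEnableTempThresh", 90.0)
    ecu.set_override("CoolantTemp", 80.0)
    return ecu.read_probe("FanCmd") == 0.0


# The suite grows as features are added and is rerun against every build.
SUITE: List[ScriptedTest] = [check_fan_on_above_threshold, check_fan_off_below_threshold]


def run_suite(ecu: ModuleConnection, suite: List[ScriptedTest]) -> List[Tuple[str, bool]]:
    """Run every check in order and collect (name, passed) results for a report."""
    return [(check.__name__, check(ecu)) for check in suite]


if __name__ == "__main__":
    for name, passed in run_suite(FakeTestModule(), SUITE):
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Each check is an ordinary function. New checks can be appended to `SUITE` as features are added, and the whole suite can be rerun against every build. This is the write-once, run-many idea in miniature.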

- Scripted tests for reliable, repeatable testing

- Run from the command line, MATLAB scripts, or an interactive console

- Send and receive CAN messages

- Set calibrations inside a running Raptor or MotoHawk model

- Read probes from inside a running Raptor or MotoHawk model

- Simulate the behavior of the entire system:

  - Set override values for digital and analog inputs

  - Connect the unit under test to a Coordinator Module running a plant model of the rest of the system

- Generate reports after each test run:

  - PDF reports for easy reading

  - XML reports for consumption by other software systems

- Use the Coordinator Module to send CAN messages

- Verify CAN messages

- Test CAN data timeouts

- Verify logic (for example, Simulink/Stateflow models) in the Test Module

- Easy-to-use graphical console for building and executing scripts
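The CAN timeout item above can be illustrated by sketching the logic such a test exercises, independent of any tool. Given the receive times of one CAN message, the helper finds every interval in which the message was absent for longer than its allowed timeout. It is a hypothetical helper, not part of Raptor-Test. The millisecond units and the observation-window parameters are assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def find_timeouts(
    rx_times_ms: Sequence[float],
    timeout_ms: float,
    start_ms: float,
    end_ms: float,
) -> List[Tuple[float, float]]:
    """Return (from, to) intervals in which a CAN message was absent longer than timeout_ms.

    rx_times_ms: ascending receive timestamps for a single CAN ID.
    start_ms, end_ms: bounds of the observation window. Including them means a
    message that never arrives, or that stops for good, is also reported.
    """
    points = [start_ms, *rx_times_ms, end_ms]
    return [(a, b) for a, b in zip(points, points[1:]) if b - a > timeout_ms]


if __name__ == "__main__":
    # A 10 ms periodic message that drops out between 20 ms and 100 ms.
    print(find_timeouts([0, 10, 20, 100, 110], timeout_ms=50, start_ms=0, end_ms=120))
    # prints [(20, 100)]
```

A test script could compare these intervals with the moment a timeout fault becomes active in the Test Module, for instance by reading a fault probe. The probe and fault names would depend on the application.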

Simulated Hardware-in-the-Loop (SimHIL)

Simulated hardware-in-the-loop (SimHIL) testing is a control systems validation strategy that uses simulated I/O to verify that the software functions meet the application requirements. The goal of a SimHIL approach is to validate the software on production hardware, using physical signals to stimulate the module. Simulated I/O can include CAN transmit and receive as well as analog/discrete inputs and outputs. Several strategies can be used to test your software functionality. One is setting software overrides from Raptor-Test over the MotoTune protocol, which allows specific subsystems and states to be triggered and tested methodically. A Coordinator Module can simulate the analog/discrete I/O or the CAN interfaces to other modules in the point-of-use application. The SimHIL test setup can be tailored to each customer application based on its software requirements.
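The division of labor described above can be illustrated with a small closed-loop simulation. In it, a plant model (as run by a Coordinator Module) exchanges a simulated sensor signal and an actuator command with the software under test. This is a sketch under stated assumptions, not New Eagle's plant model. The first-order thermal plant, the on/off fan controller standing in for the application software, the 0.1 s time step, and every numeric constant are hypothetical. On a real SimHIL bench, each side runs on its own hardware and the signals travel over CAN or analog/discrete wiring. Here, both sides are plain Python functions called once per time step.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CoolantPlant:
    """Hypothetical first-order thermal plant, as a Coordinator Module might run."""

    temp_c: float = 80.0
    tau_s: float = 10.0              # thermal time constant
    target_fan_off_c: float = 110.0  # temperature the engine settles to with the fan off
    target_fan_on_c: float = 70.0    # temperature it settles to with the fan on

    def step(self, fan_on: bool, dt_s: float) -> float:
        # Forward-Euler step of dT/dt = (T_target - T) / tau; returns the simulated sensor value.
        target = self.target_fan_on_c if fan_on else self.target_fan_off_c
        self.temp_c += (target - self.temp_c) * dt_s / self.tau_s
        return self.temp_c


def fan_controller(temp_c: float, fan_on: bool, on_c: float = 95.0, off_c: float = 90.0) -> bool:
    """Stand-in for the application software in the module under test (on/off with hysteresis)."""
    if temp_c >= on_c:
        return True
    if temp_c <= off_c:
        return False
    return fan_on


def simulate(duration_s: float = 60.0, dt_s: float = 0.1) -> List[Tuple[float, bool]]:
    plant = CoolantPlant()
    fan_on = False
    sensor_c = plant.temp_c
    history = []
    for _ in range(round(duration_s / dt_s)):
        fan_on = fan_controller(sensor_c, fan_on)  # module under test reads the simulated sensor
        sensor_c = plant.step(fan_on, dt_s)        # plant model responds to the actuator command
        history.append((sensor_c, fan_on))
    return history


if __name__ == "__main__":
    settled = simulate()[300:]  # ignore the initial warm-up
    temps = [t for t, _ in settled]
    print(f"settled range: {min(temps):.1f} to {max(temps):.1f} degC")
```

A test script would then check a requirement against the recorded history. One example is that the temperature stays inside a band once the system has warmed up.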

Differences Between 'In-the-Loop' Test Methods

- Hardware-in-the-loop (HIL) simulation is a system test in which some of the hardware components are represented by complex mathematical models or controllers.

- Simulated hardware-in-the-loop (SimHIL) simulation is similar to HIL, but the hardware components surrounding the module are replaced by simulated models and controllers (for example, a Coordinator Module running a plant model).

- Processor-in-the-loop (PIL) simulation runs the code on the microprocessor of the target hardware or board.

- Software-in-the-loop (SIL) simulation runs the code on the PC; no target hardware is involved.

Frequently Asked Questions (FAQ)

For a list of common questions and answers about Raptor-Test, see our Raptor-Test [FAQ page](https://support.neweagle.net/support/home).

New Eagle Raptor-Test Products

License Options

Customers can purchase either a node-locked or a dongle-based version of the Raptor-Test software. A dongle is a small USB device that contains the software license. The advantage of a USB dongle is that the license can easily be moved from one computer to another. A node-locked license, on the other hand, resides on the computer itself and eliminates the need for any external licensing hardware. Node-locked licenses also cannot be lost or misplaced, which can be a problem with USB license dongles. Node-locked licenses can be transferred from one computer to another, but the process is not as quick and simple as unplugging a USB dongle from one computer and plugging it into another.

Software Updates

Customers have access to the latest software releases for one year after purchase. Each software release adds new features and fixes bugs. Customers who wish to keep access to new software releases after their first year can purchase a software maintenance license. Software maintenance licenses are valid for one year and can be renewed indefinitely.

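| Product | Part Number | Webstore |
|---|---|---|
| Raptor-Test Software | RAP-TEST-SW-01 | [Buy Now](https://store.neweagle.net/shop/raptor/raptor-software/raptor-test-automated-testing-validation-tool/raptor-test-software-node-lock/) |

| Maintenance Product | Part Number | Webstore |
|---|---|---|
| Raptor-Test, Annual Software Maintenance | RAP-TEST-SM-01 | [Buy Now](https://store.neweagle.net/shop/raptor/raptor-software/raptor-test-automated-testing-validation-tool/raptor-test-annual-software-maintenance/) |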

Custom Automated Testing Bench

Here is an automated testing bench that New Eagle designed and built for a customer. It includes a Module Under Test, a Coordinator Module, and a CAN-to-Analog Module for simulated hardware-in-the-loop testing. The bench enables verification of the specific requirements of the customer's application.
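On a bench like this, one plausible arrangement is for a test script or the Coordinator Module to request a voltage by sending a CAN frame. The CAN-to-Analog Module then drives the corresponding analog input of the Module Under Test. The message layout below is a hypothetical example, not the layout of any New Eagle product. It packs four channels, each an unsigned 16-bit little-endian value in millivolts over a 0-5 V range, into one 8-byte payload. A real bench would take the layout from the module's CAN database. The sketch shows the scaling and packing step, plus the matching decode a test could use to verify a captured message.

```python
import struct
from typing import Sequence, Tuple

# Hypothetical message layout: four channels, each an unsigned 16-bit
# little-endian value in millivolts, packed into one 8-byte CAN payload.
CHANNELS = 4
MAX_MV = 5000  # hypothetical 0-5 V output range


def encode_voltages(volts: Sequence[float]) -> bytes:
    """Scale requested channel voltages to millivolts and pack them into a payload."""
    if len(volts) != CHANNELS:
        raise ValueError(f"expected {CHANNELS} channel values, got {len(volts)}")
    raw = []
    for v in volts:
        mv = round(v * 1000)
        if not 0 <= mv <= MAX_MV:
            raise ValueError(f"{v} V is outside the 0-5 V output range")
        raw.append(mv)
    return struct.pack(f"<{CHANNELS}H", *raw)


def decode_voltages(payload: bytes) -> Tuple[float, ...]:
    """Inverse of encode_voltages, e.g. to verify a frame captured from the bus."""
    return tuple(mv / 1000 for mv in struct.unpack(f"<{CHANNELS}H", payload))


if __name__ == "__main__":
    payload = encode_voltages([2.5, 0.0, 5.0, 1.234])
    print(payload.hex())  # c40900008813d204
    print(decode_voltages(payload))
```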

Please [contact sales](mailto:sales@neweagle.net) for more information.

Release Notes

For a list of all Raptor-Test releases and notes on each release, see our release notes [here](https://www.neweagle.net/support/wiki/index.php?title=Raptor-Test-Release-Notes).

New Eagle Raptor-Test Training

New Eagle offers training on using the Raptor-Test tool.

RAPTOR TOOL TRAINING SEAT

For more information, please [contact sales](mailto:sales@neweagle.net).

RAPTOR TOOL TRAINING ON-SITE

For more information, please [contact sales](mailto:sales@neweagle.net).